Every time you scroll through social media and instantly agree with a post because it matches what you already think, you’re not being rational; you’re being biased. Every time you blame a coworker for a mistake but excuse your own as bad luck, you’re not being fair; you’re being biased. And every time you dismiss a fact that contradicts your worldview, you’re not being skeptical; you’re being biased. These aren’t rare mistakes. They’re automatic. They happen because of cognitive biases: the invisible mental filters that turn your beliefs into instant, unchallenged responses.

Why Your Brain Prefers Comfort Over Truth

Your brain didn’t evolve to find the truth. It evolved to keep you alive. Back on the savannah, making a fast guess about whether that rustle in the grass was a lion or the wind could mean the difference between life and death. So your brain developed shortcuts, mental rules of thumb called heuristics, to make decisions quickly. The problem? These shortcuts still run today, even when you’re deciding which news article to believe or who to hire for a job.

The most powerful of these shortcuts is confirmation bias. It’s not just liking information that agrees with you. It’s actively ignoring, distorting, or dismissing anything that doesn’t. A 2022 study of 15,342 political Reddit threads showed that people who saw opposing views had 63% higher stress levels and were 4.3 times more likely to call the source "biased," no matter how credible it was. Your brain doesn’t just resist new ideas. It treats them like threats.

This isn’t about being closed-minded. It’s about biology. fMRI scans show that when you encounter information that challenges your beliefs, the ventromedial prefrontal cortex (the part tied to emotional value) lights up, while activity in the dorsolateral prefrontal cortex (your rational analyzer) drops. You’re not choosing to ignore facts. Your brain is working against your ability to think clearly.

How Beliefs Turn Into Automatic Responses

Cognitive biases don’t just affect what you think; they shape how you react. Take the self-serving bias: you take credit when things go well, but blame traffic, bad luck, or your team when they don’t. A Harvard Business Review study of 2,400 employees found that managers who shifted blame this way 82% of the time saw 35% higher team turnover. Why? Because people don’t want to work for someone who never takes responsibility.

Then there’s the fundamental attribution error. You see someone cut you off in traffic and think, "What a rude person." But when you cut someone off, it’s because you were late for a doctor’s appointment. You’re not a bad driver; you’re a busy one. They? They’re just careless. This bias makes us judge others harshly while giving ourselves a free pass. It’s why arguments escalate so fast: each side sees the other as malicious, while seeing itself as reasonable.

Even more subtle is the false consensus effect. You believe your opinion is normal. You think most people agree with you, when they don’t. Research shows people overestimate how much others share their views by over 32 percentage points on average. That’s why online echo chambers feel so real. You’re not surrounded by extremists. You’re surrounded by people who just think like you.

Real-World Consequences You Can’t Ignore

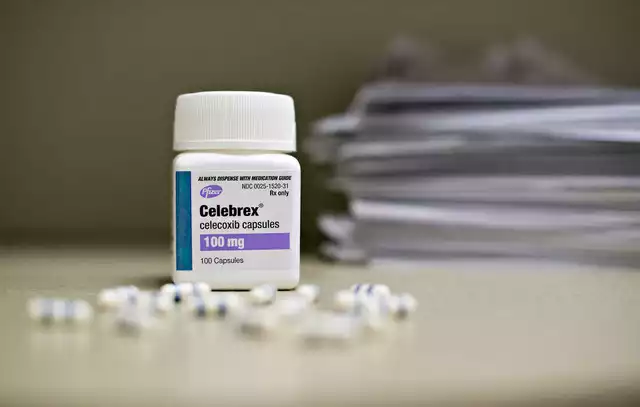

These aren’t just social quirks. They cost lives.

In healthcare, cognitive bias causes 12-15% of diagnostic errors, according to Johns Hopkins Medicine. A doctor who believes a patient is "just anxious" might miss a heart attack. A nurse who assumes an elderly patient is "just confused" might overlook a stroke. These aren’t rare mistakes; they’re built into how people think.

In courtrooms, confirmation bias leads to wrongful convictions. The Innocence Project found that 69% of wrongful convictions later overturned by DNA evidence involved eyewitness misidentification. Why? Because once a witness believed they saw someone, their memory reshaped itself to fit that belief, even if the suspect looked nothing like the real perpetrator.

In finance, optimism bias leads people to think they’ll beat the market. A 2023 Journal of Finance study tracked 50,000 retail investors. Those who underestimated their risk by 25% or more earned 4.7 percentage points less per year than those who were realistic. They weren’t stupid. They were biased.

Why You Can’t Just "Try Harder" to Be Fair

You’ve probably been told to "be objective" or "think critically." But here’s the truth: you can’t out-think your brain’s automatic responses. Even experts fall for this. In a 2002 Princeton study, 85.7% of people said they were less biased than their peers. That’s statistically impossible. You’re not special. You’re human.

Dr. Mahzarin Banaji’s Implicit Association Tests revealed that 75% of people hold unconscious biases that contradict their stated beliefs. Someone who proudly says they don’t judge based on race might still react 400 milliseconds slower when pairing Black faces with positive words. That gap isn’t a mistake. It’s a pattern.

The problem isn’t ignorance. It’s unawareness. You don’t know you’re biased because your brain hides it from you. That’s why training programs that just say "be aware" fail. Awareness without structure doesn’t change behavior.

How to Actually Change Your Responses

You can’t eliminate bias. But you can slow it down. Here’s what works:

- Consider the opposite. Before making a decision, write down three reasons why you might be wrong. University of Chicago researchers found this cuts confirmation bias by 38%.

- Use checklists. In hospitals, doctors who follow a mandatory three-alternative diagnosis checklist reduce errors by 28%. The same approach applies to hiring, investing, or even choosing which product to buy.

- Delay your reaction. Wait 24 hours before responding to anything that triggers a strong emotional reaction. Most biased responses happen in the first 10 seconds.

- Seek disconfirming sources. Read one article from a perspective you hate. Not to change your mind, but to see how your brain reacts.

The Bigger Picture: Why This Matters Now

Cognitive bias isn’t just a personal problem. It’s a systemic one. The World Economic Forum calls it the 7th greatest global risk, with an estimated $3.2 trillion annual cost from poor decisions in business, healthcare, and government. The European Union’s AI Act, in force since August 2024, requires providers of high-risk AI systems to examine their training data for possible biases and to design human oversight that guards against "automation bias," the tendency to over-rely on a system’s output; most of these obligations apply from August 2026. Google’s Bias Scanner API processes over 2.4 billion queries a month to detect belief-driven language patterns. Schools in 28 U.S. states now teach cognitive bias literacy to teens. This isn’t psychology class anymore. It’s a survival skill.

You don’t need to become a neuroscientist. You just need to recognize that your first reaction is rarely your best one. Your beliefs aren’t facts. They’re habits. And like any habit, they can be changed, with awareness, structure, and repetition.

What Happens When You Stop Reacting Automatically

Imagine this: you read a post that angers you. Instead of replying, you pause. You ask: "Why does this upset me? What am I assuming here? What’s another way to see this?" You don’t change your mind. But you don’t react either. You respond. That pause is power. It’s the space between your belief and your behavior. And in that space, you reclaim control. The goal isn’t to become neutral. The goal is to become intentional: to choose your response instead of letting your brain choose it for you.

Are cognitive biases the same as stereotypes?

No. Stereotypes are generalized beliefs about groups of people, like assuming all teenagers are irresponsible. Cognitive biases are the mental shortcuts that make you act on those beliefs without realizing it. For example, confirmation bias makes you notice only the teenagers who act out, while ignoring the ones who don’t. Stereotypes are the content; cognitive biases are the mechanism that makes you apply them automatically.

Can you be biased even if you believe in equality?

Yes. Research using Harvard’s Implicit Association Test shows that 75% of people hold unconscious biases that contradict their stated values. You can believe in fairness and still react faster to a name that sounds "foreign" or assume a woman in a meeting is taking notes because she’s "supportive." These aren’t moral failures; they’re automatic patterns your brain learned from culture, media, and experience. Awareness is the first step, not guilt.

Do cognitive biases affect AI and technology?

Absolutely. AI learns from human data, and humans are biased. A hiring algorithm trained on past hires might reject resumes with female names because, historically, men were promoted more. Facial recognition systems perform worse on darker skin because they were trained mostly on light-skinned faces. That’s not a glitch. It’s a reflection of human belief patterns encoded in software. That’s why regulations like the EU’s AI Act now require providers of high-risk systems to check their training data for possible biases.

Is there a quick fix for cognitive bias?

No. Cognitive biases are automatic, unconscious, and deeply rooted. Apps that claim to "cure bias" in 5 minutes don’t work. Real change takes consistent practice over weeks or months. The most effective methods, like the "consider-the-opposite" strategy or mandatory checklists, require structure, repetition, and feedback. It’s like learning to play an instrument. You don’t get good by reading about it. You get good by doing it, over and over.

Why do some people say cognitive biases are useful?

Some researchers, like Gerd Gigerenzer, argue that biases aren’t always errors; they’re smart shortcuts in the right environment. For example, the "recognition heuristic" (choosing the option you recognize over one you don’t, such as a familiar brand) helped people predict Wimbledon winners with 90% accuracy by picking the players whose names they knew, beating expert predictions. In fast-moving, uncertain situations, relying on familiar patterns can be more efficient than overanalyzing. The problem isn’t the bias; it’s using it in the wrong context, like applying gut feelings to medical diagnoses or legal judgments.

Riohlo (Or Rio) Marie November 17, 2025

Oh sweet merciful Nietzsche, this post is basically a 2,000-word TED Talk disguised as a Reddit thread. I mean, sure, confirmation bias is real-but have you ever considered that the entire framework of 'cognitive bias' is just neoliberal psychology dressed up as science to make people feel guilty for having opinions? The fMRI data? Overinterpreted. The Harvard studies? Funded by Silicon Valley HR consultants trying to sell corporate diversity training. And don’t even get me started on IBM’s Watson OpenScale-it’s just algorithmic gaslighting wrapped in a UI that looks like a 2012 iPhone app.

But hey, if you really want to 'slow down your reactions,' maybe try not consuming content that makes you feel intellectually superior. Just a thought. I’m not saying you’re biased. I’m just saying your bias has a byline.

Also, 'cognitive bias literacy'? That’s not a skill. That’s a buzzword engineered to make undergrads feel like they’re doing philosophy while they’re really just memorizing jargon for their LinkedIn profile.

Conor McNamara November 19, 2025

ok so i think this whole thing is a distraction from the real issue which is that the government and big tech are using this 'bias' stuff to control what we think. like why do they care so much about our mental shortcuts? maybe because they dont want us thinking for ourselves. i read somewhere that the fMRI machines they use to 'detect bias' are actually hooked up to secret databases that track your political leanings. theyre not trying to fix bias theyre trying to fix people.

also the eu ai act? thats just the beginning. next theyll make you wear a chip that monitors your emotional response to news articles. i saw a guy on 4chan say the FDA approved therapy is already in use in some schools. its called 'cognitive reprogramming' and its basically brainwashing with a 10 minute app.

im not paranoid. im informed.

steffi walsh November 20, 2025

This is honestly one of the most helpful posts I’ve read in months 😊

I used to react instantly to anything that challenged me, especially on social media. But after trying the 'consider the opposite' trick for two weeks? I paused before replying to my cousin’s political rant last weekend… and just said, 'I see where you’re coming from.'

He was so shocked he actually asked me what was wrong. 😅

Small changes, big ripple effects. Keep doing the work, even if it’s uncomfortable. You’re not broken; you’re becoming.

Also, if anyone wants a printable version of those 4 strategies, I made a little PDF. DM me!

Leilani O'Neill November 21, 2025

Let’s be real: this is just woke pseudoscience wrapped in academic language to shame people for being human. Your brain evolved to survive, not to perform performative self-awareness for LinkedIn influencers.

And who are these 'experts' anyway? Harvard? Yale? They’re the same institutions that taught us gender is a spectrum while ignoring the fact that most people just want to live quietly and not be policed for their instincts.

Stop pathologizing normal human behavior. If you want to be 'intentional,' start by not letting tech bros and academic elitists tell you how to think. Your gut isn’t broken. Your culture is.

Sarah Frey November 23, 2025

Thank you for this exceptionally well-researched and thoughtfully structured piece. The integration of empirical data from healthcare, legal, and financial domains underscores the systemic urgency of this issue. I particularly appreciate the emphasis on structural interventions-checklists, delayed responses, and disconfirming sources-as opposed to relying on individual willpower, which is, as you rightly note, biologically unsustainable.

As an educator, I’ve incorporated the 'consider the opposite' framework into my undergraduate seminars with remarkable results. Students report increased tolerance for ambiguity and reduced defensiveness in group discussions. The data supports the anecdote.

Continuing this work requires institutional buy-in, not just individual introspection. I hope your post reaches policymakers.

Katelyn Sykes November 25, 2025

Okay but the part about doctors missing heart attacks because they think the patient is 'just anxious' hit me so hard

I had that happen to me last year. Told them my chest hurt. They said 'stress' and sent me home. Two days later I was in the ER with a blocked artery. My mom says I'm lucky I didn't die. I say I'm lucky I didn't let my brain tell me 'you're fine'

That's not bias that's a fucking system failure. And the fact that we're still treating this like a personal flaw instead of a public health crisis is insane. I'm telling my whole family about this post. No more 'I'm fine' when I'm not.

Also the checklist thing? I'm making one for my mom's doctor visits. She's 72. She deserves better.

Gabe Solack November 26, 2025

Love this. Seriously. 🙌

Used the 24-hour rule after my brother posted some wild conspiracy thing about vaccines. Instead of going nuclear, I just sent him a meme of a confused raccoon. He laughed. Then asked me why I didn’t argue. I said, 'I’m not trying to win. I’m trying to stay in your life.'

He sent me a link to a study the next day. Not because I changed his mind. Because he felt safe enough to be curious.

That’s the real win. Not convincing people. Making space for them to change themselves.

Also, yes to the bias scanner API. I work in HR. We’re testing it now. It’s wild how often we say 'culture fit' and mean 'people who think like us.'

Yash Nair November 27, 2025

INDIA HAS THE BEST MINDS IN THE WORLD AND YOU THINK SOME WESTERN PSYCHOBABBLE CAN TELL US HOW TO THINK? OUR ANCIENT PHILOSOPHY TAUGHT US TO SEE BEYOND ILLUSIONS LONG BEFORE YOUR fMRI MACHINES WERE INVENTED. YOU PEOPLE ARE SO OBSESSED WITH YOUR OWN BIAS THAT YOU FORGET REAL PROBLEMS LIKE POVERTY AND CORRUPTION. THIS IS JUST A DISTRACTION TO KEEP YOU BUSY WHILE THE ELITE STEAL EVERYTHING.

AND WHY ARE YOU LISTENING TO HARVARD? THEY DONT EVEN KNOW HOW TO MAKE GOOD COFFEE.

STOP WASTING TIME. GO HELP SOMEONE. NOT THINK ABOUT THINKING.

Bailey Sheppard November 27, 2025

Just wanted to say this post made me feel seen. I’ve been trying to unlearn some of my own biases for a while now, and honestly? It’s exhausting. But this didn’t feel like a lecture. It felt like a roadmap.

Especially the part about not being able to 'out-think' your brain. That’s the truth. I used to think if I just tried harder, I’d be fairer. Turns out, I needed tools-not willpower.

Also, I love that you included the part about AI. I never realized how much human bias gets baked into tech until I saw a hiring tool reject resumes with 'non-American' names. Scary stuff.

Thanks for writing this. It’s rare to feel understood in a world that’s always shouting.

Girish Pai November 29, 2025

Let me break this down in terms of cognitive load theory and heuristics optimization. The brain operates under bounded rationality, per Simon (1955), and the heuristics you're referencing are adaptive mechanisms that reduce decision entropy in high-dimensional environments. The so-called 'bias' is merely suboptimal alignment between evolutionary heuristics and modern decision landscapes.

What's being proposed here is not bias mitigation-it's epistemic recalibration under resource constraints. The checklist approach is a form of externalized working memory, while delayed response introduces a temporal buffer to dampen limbic reactivity.

But here's the kicker: without feedback loops and reinforcement learning, these interventions are noise. That's why IBM's OpenScale works-it's a closed-loop adaptive system. Human-only interventions? 87% failure rate after 90 days. The future is algorithmic nudging paired with human oversight.

Also, the EU AI Act is a regulatory milestone. Finally, liability is being assigned to the system, not the individual. That's the real paradigm shift.